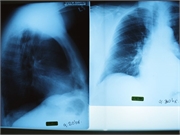

Models developed to detect pneumothorax, opacity, nodule or mass, fracture on frontal chest radiographs

WEDNESDAY, Dec. 4, 2019 (HealthDay News) — Deep learning models can be used for interpretation of chest radiographs, according to a study published online Dec. 3 in Radiology.

Anna Majkowska, Ph.D., from Google Health in Mountain View, California, and colleagues developed deep learning models to detect four findings (pneumothorax, opacity, nodule or mass, and fracture) on frontal chest radiographs using two data sets. Data set 1 (DS1) comprised 759,611 images from a multicity hospital network, and ChestX-ray14, a publicly available data set, comprised 112,120 images. Labels were provided for 657,954 training images using natural language processing and expert review. Test sets included 1,818 images from DS1 and 1,962 images from ChestX-ray14. Performance was assessed using the area under the receiver operating characteristic curve; for comparison, four radiologists reviewed the test set images.

The researchers found that for pneumothorax, nodule or mass, airspace opacity, and fracture, the population-adjusted areas under the receiver operating characteristic curve were 0.95, 0.72, 0.91, and 0.86, respectively, in DS1. The corresponding areas under the receiver operating characteristic curves in the ChestX-ray14 data set were 0.94, 0.91, 0.94, and 0.81.
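For readers unfamiliar with the metric, the area under the receiver operating characteristic curve can be read as the probability that a model scores a randomly chosen image with the finding higher than a randomly chosen image without it. The short Python sketch below illustrates that definition only. The function name `roc_auc` and the toy labels and scores are hypothetical, not taken from the study, and the sketch does not reproduce the population adjustment the researchers applied.

```python
def roc_auc(labels, scores):
    """Plain (unadjusted) AUC: the fraction of positive/negative pairs in which
    the positive example receives the higher score; ties count as half."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative example")

    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Hypothetical toy data: 1 = finding present, 0 = finding absent.
labels = [1, 1, 1, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.3, 0.7, 0.2, 0.1, 0.4]

auc = roc_auc(labels, scores)
print(f"AUC = {auc:.2f}")  # 10 of 12 pairs are ranked correctly, so AUC = 0.83
```

A value of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation, which gives some context for the reported values between 0.72 and 0.95.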

“We developed and evaluated clinically relevant artificial intelligence models for chest radiograph interpretation that performed similar to radiologists by using a diverse set of images,” the authors write.

Several authors disclosed financial ties to Google.

Copyright © 2019 HealthDay. All rights reserved.